April 23, 2024

EECS Professor Sayeef Salahuddin and alumnus Kevin Kornegay elected lifetime fellows of the American Association for the Advancement of Science

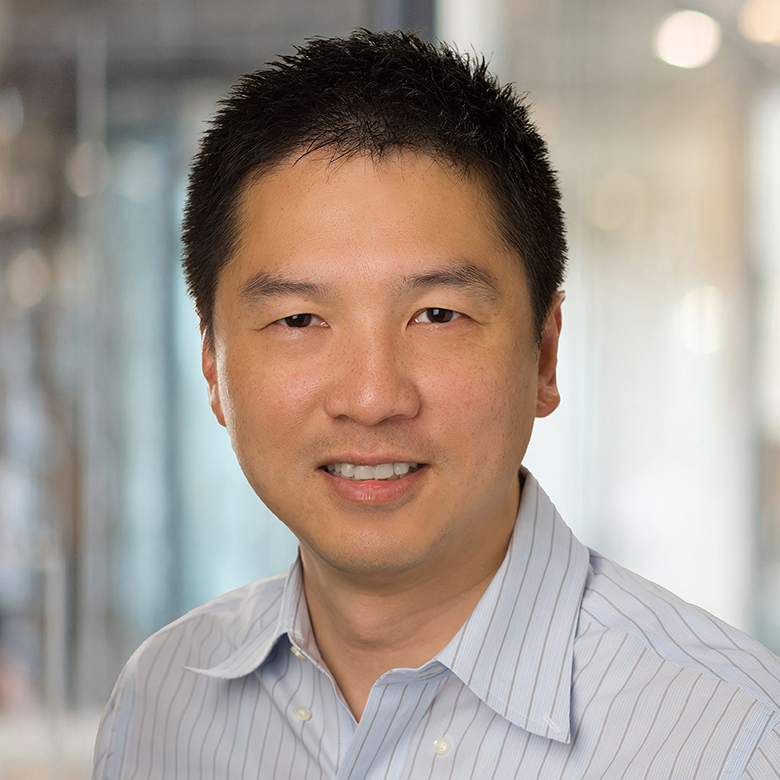

Sayeef Salahuddin, TSMC Distinguished Professor of Electrical Engineering and Computer Sciences, has been elected a lifetime fellow of the American Association for the Advancement of Science (AAAS). He was recognized “for distinguished contributions to electronic device science and engineering, in particular for inventing negative capacitance devices with potential for…